Getting Started With Axiom Processing Language (APL)

In this tutorial, you'll explore how to use APL in Axiom Data Explorer to run queries using Tabular Operators, Scalar Functions, and Aggregation Functions.

Prerequisites

- Sign up for and log in to your Axiom account.

- Ingest data into your dataset, or run queries in our Play Sandbox.

Overview of APL

Every query starts with a dataset name enclosed in square brackets, and its starting expression is a tabular operator statement. The query's tabular expression statements produce the results of the query.

Before each tabular operator or function, a pipe (|) delimiter separates the query statements, and the data flows from one statement to the next.
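To make the flow through the pipe concrete, here is a minimal Python sketch of the idea. It is a model only, not how Axiom executes APL: the sample rows and the `where_`, `take`, and `run` helpers are hypothetical stand-ins invented for illustration.

```python
from functools import reduce

# Hypothetical sample rows standing in for a dataset such as ['sample-http-logs'].
rows = [
    {"method": "GET", "status": "200"},
    {"method": "POST", "status": "500"},
    {"method": "GET", "status": "404"},
]

def where_(predicate):
    """Stand-in for `| where`: keep the rows that satisfy the predicate."""
    return lambda table: [row for row in table if predicate(row)]

def take(n):
    """Stand-in for `| take`: return up to n rows."""
    return lambda table: table[:n]

def run(dataset, *stages):
    """Feed the dataset through each stage in order, like statements joined by `|`."""
    return reduce(lambda table, stage: stage(table), stages, dataset)

result = run(rows, where_(lambda r: r["method"] == "GET"), take(1))
print(result)
```

Each stage receives the table produced by the previous stage, which is why the order of statements in a query matters.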

Commonly used Operators

To run queries on each function or operator in this tutorial, click the Run in Playground button.

summarize: Produces a table that aggregates the content of the dataset.

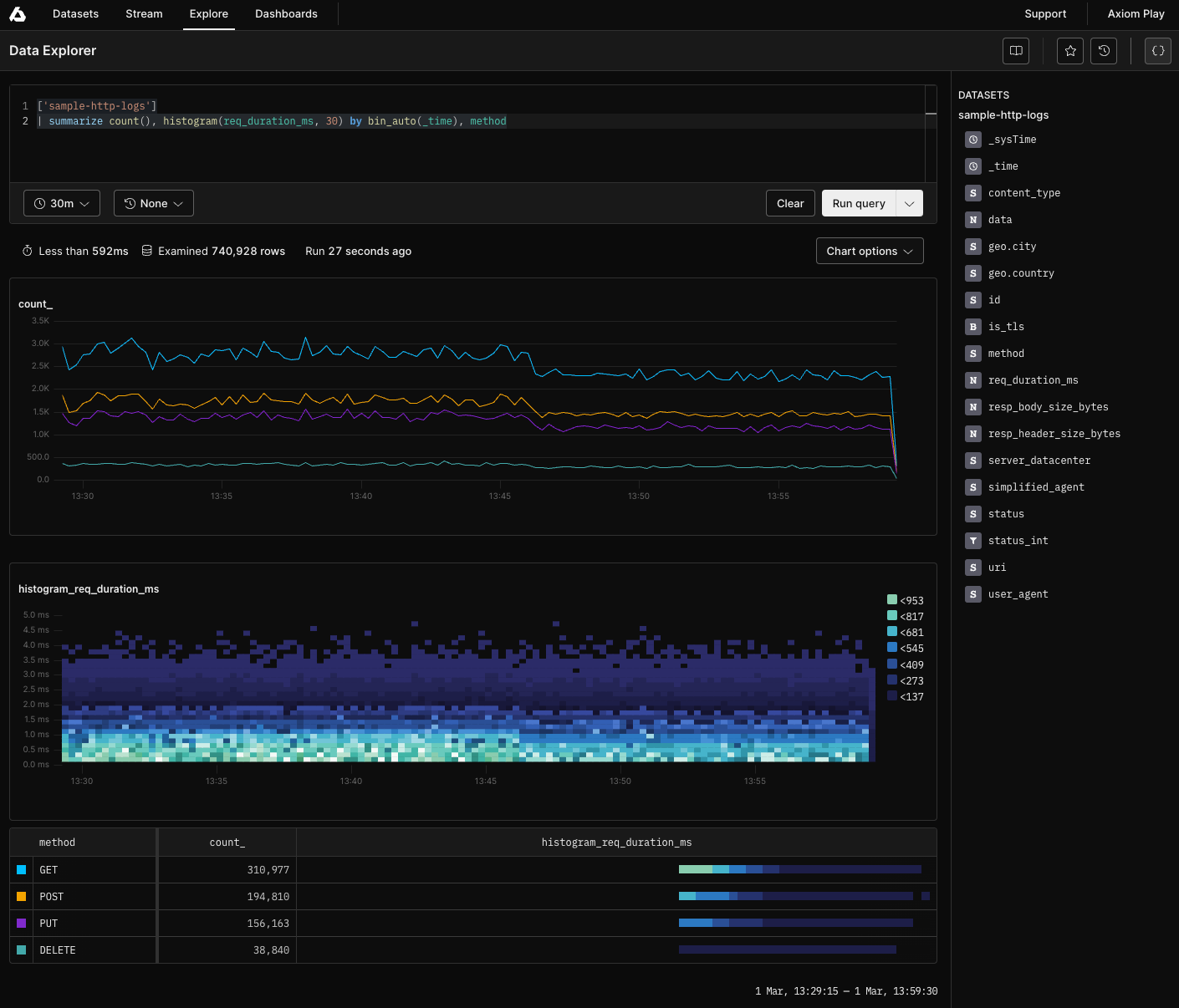

The following query returns the count of events by time:

['github-push-event']

| summarize count() by bin_auto(_time)

You can use the aggregation functions with the summarize operator to produce different columns.

Top 10 GitHub push events by maximum push id

['github-push-event']

| summarize max_if = maxif(push_id, true) by size

| top 10 by max_if desc

Distinct city count by server datacenter

['sample-http-logs']

| summarize cities = dcount(['geo.city']) by server_datacenter

The result of a summarize operation has:

- A row for every combination of by values

- Each column named in by

- A column for each expression
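The result shape described in the list above can be modeled with a short Python sketch. This is an illustration under assumptions, not Axiom's implementation: the rows and the `summarize_dcount` helper are hypothetical, and the sketch counts distinct values exactly, which may differ from how dcount computes its result.

```python
from collections import defaultdict

# Hypothetical rows; the field names mirror the dcount query above.
rows = [
    {"server_datacenter": "GRU", "geo.city": "Okayama"},
    {"server_datacenter": "GRU", "geo.city": "Camaquã"},
    {"server_datacenter": "GRU", "geo.city": "Okayama"},
    {"server_datacenter": "LHR", "geo.city": "Okayama"},
]

def summarize_dcount(table, value_field, by_field, out_name):
    """Group rows by by_field and count the distinct value_field values per group."""
    groups = defaultdict(set)
    for row in table:
        groups[row[by_field]].add(row[value_field])
    # One output row per group: the by column plus one column for the expression.
    return [{by_field: key, out_name: len(values)} for key, values in sorted(groups.items())]

summary = summarize_dcount(rows, "geo.city", "server_datacenter", "cities")
for row in summary:
    print(row)
```

The output has one row per distinct server_datacenter value, the by column itself, and one column (cities) for the aggregation expression.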

where: Filters the content of the dataset that meets a condition when executed.

The following query filters the data by method and content_type:

['sample-http-logs']

| where method == "GET" and content_type == "application/octet-stream"

| project method, content_type

count: Returns the number of events from the input dataset.

['sample-http-logs']

| count

['sample-http-logs']

| summarize count() by bin_auto(_time)

project: Selects a subset of columns.

['sample-http-logs']

| project content_type, ['geo.country'], method, resp_body_size_bytes, resp_header_size_bytes

take: Returns up to the specified number of rows.

['sample-http-logs']

| take 100

The limit operator is an alias of the take operator.

['sample-http-logs']

| limit 10

Scalar Functions

parse_json()

The following query extracts the JSON elements from an array:

['sample-http-logs']

| project parsed_json = parse_json( "config_jsonified_metrics")

replace_string(): Replaces all string matches with another string.

['sample-http-logs']

| extend replaced_string = replace_string( "creator", "method", "machala" )

| project replaced_string

split(): Splits a given string according to a given delimiter and returns a string array.

['sample-http-logs']

| project split_str = split("method_content_metrics", "_")

| take 20

strcat_delim(): Concatenates two or more arguments into a single string, separated by the delimiter given as the first argument.

['sample-http-logs']

| project strcat = strcat_delim(":", ['geo.city'], resp_body_size_bytes)

indexof(): Reports the zero-based index of the first occurrence of a specified string within the input string.

['sample-http-logs']

| extend based_index = indexof( ['geo.country'], content_type, 45, 60, resp_body_size_bytes ), specified_time = bin(resp_header_size_bytes, 30)

Regex Examples

['sample-http-logs']

| project remove_cutset = trim_start_regex("[^a-zA-Z]", content_type )

Finding logs from a specific city

['sample-http-logs']

| where tostring(['geo.city']) matches regex "^Camaquã$"

Identifying logs from a specific user agent

['sample-http-logs']

| where tostring(user_agent) matches regex "Mozilla/5.0"

Finding logs with response body size in a certain range

['sample-http-logs']

| where toint(resp_body_size_bytes) >= 4000 and toint(resp_body_size_bytes) <= 5000

Finding logs with user agents containing Windows NT

['sample-http-logs']

| where tostring(user_agent) matches regex @"Windows NT [\d\.]+"

Finding logs with specific response header size

['sample-http-logs']

| where toint(resp_header_size_bytes) == 31

Finding logs with specific request duration

['sample-http-logs']

| where toreal(req_duration_ms) < 1

Finding logs where TLS is enabled and method is POST

['sample-http-logs']

| where tostring(is_tls) == "true" and tostring(method) == "POST"

Array functions

array_concat(): Concatenates a number of dynamic arrays to a single array.

['sample-http-logs']

| extend concatenate = array_concat( dynamic([5,4,3,87,45,2,3,45]))

| project concatenate

array_sum(): Calculates the sum of elements in a dynamic array.

['sample-http-logs']

| extend summary_array=dynamic([1,2,3,4])

| project summary_array=array_sum(summary_array)

Conversion functions

todatetime(): Converts input to datetime scalar.

['sample-http-logs']

| extend dated_time = todatetime("2026-08-16")

dynamic_to_json(): Converts a scalar value of type dynamic to a canonical string representation.

['sample-http-logs']

| extend dynamic_string = dynamic_to_json(dynamic([10,20,30,40 ]))

String Operators

We support various query string, logical and numerical operators.

In the query below, we use the contains operator to find actors whose names contain the string -bot or [bot]:

['github-issue-comment-event']

| extend bot = actor contains "-bot" or actor contains "[bot]"

| where bot == true

| summarize count() by bin_auto(_time), actor

| take 20

['sample-http-logs']

| extend user_status = status contains "200" , agent_flow = user_agent contains "(Windows NT 6.4; AppleWebKit/537.36 Chrome/41.0.2225.0 Safari/537.36"

| where user_status == true

| summarize count() by bin_auto(_time), status

| take 15

Hash Functions

- hash_md5(): Returns an MD5 hash value for the input value.

- hash_sha256(): Returns a SHA-256 hash value for the input value.

- hash_sha1(): Returns a SHA-1 hash value for the input value.
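For intuition about what these digests look like, here is a minimal Python sketch using the standard hashlib module. It illustrates the hash algorithms themselves, not APL: the input value is hypothetical, and the assumption that the APL functions return the digest in the same hexadecimal form is not confirmed by this tutorial.

```python
import hashlib

# Hypothetical input standing in for a field value such as method.
value = "GET".encode("utf-8")

# Hex-encoded digests from the three algorithms listed above.
digests = {
    "md5": hashlib.md5(value).hexdigest(),
    "sha1": hashlib.sha1(value).hexdigest(),
    "sha256": hashlib.sha256(value).hexdigest(),
}

for name, digest in digests.items():
    # The digest length depends only on the algorithm, not on the input length.
    print(name, len(digest), digest)
```

The same input always produces the same digest, which is what makes hashed fields usable for grouping or comparison.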

['sample-http-logs']

| extend sha_256 = hash_sha256( "resp_header_size_bytes" ), sha_1 = hash_sha1( content_type), md5 = hash_md5( method), sha512 = hash_sha512( "resp_header_size_bytes" )

| project sha_256, sha_1, md5, sha512

List all unique groups

['sample-http-logs']

| distinct ['id'], is_tls

Count of all events per service

['sample-http-logs']

| summarize Count = count() by server_datacenter

| order by Count desc

Change the time clause

['github-issues-event']

| where _time > ago(1m)

| summarize count(), sum(['milestone.number']) by _time=bin(_time, 1m)

Rounding functions

- floor(): Calculates the largest integer less than, or equal to, the specified numeric expression.

- ceiling(): Calculates the smallest integer greater than, or equal to, the specified numeric expression.

- bin(): Rounds values down to an integer multiple of a given bin size.
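The three definitions above can be checked with a small Python sketch. It models the stated behavior only: `bin_value` is a hypothetical helper written from the bin() description, not Axiom's implementation.

```python
import math

def bin_value(value, bin_size):
    """Round value down to an integer multiple of bin_size, per the bin() description."""
    return math.floor(value / bin_size) * bin_size

print(math.floor(2.7))   # largest integer <= 2.7
print(math.ceil(2.1))    # smallest integer >= 2.1
print(bin_value(43, 5))  # largest multiple of 5 that is <= 43
```

With a bin size of 5, every value from 40 up to (but not including) 45 lands in the same bin, which is why bin() is useful for grouping in summarize.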

['sample-http-logs']

| extend largest_integer_less = floor( resp_header_size_bytes ), smallest_integer_greater = ceiling( req_duration_ms ), integer_multiple = bin( resp_body_size_bytes, 5 )

| project largest_integer_less, smallest_integer_greater, integer_multiple

Truncate decimals using round function

['sample-http-logs']

| project rounded_value = round(req_duration_ms, 2)

Truncate decimals using floor function

['sample-http-logs']

| project floor_value = floor(resp_body_size_bytes), ceiling_value = ceiling(req_duration_ms)

HTTP 5xx responses (day wise) for the last 7 days - one bar per day

['sample-http-logs']

| where _time > ago(7d)

| where req_duration_ms >= 5 and req_duration_ms < 6

| summarize count(), histogram(resp_header_size_bytes, 20) by bin(_time, 1d)

| order by _time desc

Implement a remapper on remote address logs

['sample-http-logs']

| extend RemappedStatus = case(req_duration_ms >= 0.57, "new data", resp_body_size_bytes >= 1000, "size bytes", resp_header_size_bytes == 40, "header values", "doesntmatch")

Advanced aggregations

In this section, you will learn how to run queries using different functions and operators.

['sample-http-logs']

| extend prospect = ['geo.city'] contains "Okayama" or uri contains "/api/v1/messages/back"

| extend possibility = server_datacenter contains "GRU" or status contains "301"

| summarize count(), topk( user_agent, 6 ) by bin(_time, 10d), ['geo.country']

| take 4

Searching map fields

['otel-demo-traces']

| where isnotnull( ['attributes.custom'])

| extend extra = tostring(['attributes.custom'])

| search extra:"0PUK6V6EV0"

| project _time, trace_id, name, ['attributes.custom']

Configure processing rules

['sample-http-logs']

| where _sysTime > ago(1d)

| summarize count() by method

Return different values based on the evaluation of a condition

['sample-http-logs']

| extend MemoryUsageStatus = iff(req_duration_ms > 10000, "Highest", "Normal")

Compute time between two log entries

['sample-http-logs']

| where content_type == 'text/html'

| project start=_time, id=['id']

Working with different operators

['hn']

| extend superman = text contains "superman" or title contains "superman"

| extend batman = text contains "batman" or title contains "batman"

| extend hero = case(

superman and batman, "both",

superman, "superman ", // spaces change the color

batman, "batman ",

"none")

| where (superman or batman) and not (batman and superman)

| summarize count(), topk(type, 3) by bin(_time, 30d), hero

| take 10

['sample-http-logs']

| summarize flow = dcount( content_type) by ['geo.country']

| take 50

Get the JSON into a property bag using parse_json

example

| where isnotnull(log)

| extend parsed_log = parse_json(log)

| project service, parsed_log.level, parsed_log.message

Get average response using the project-keep operator

['sample-http-logs']

| where ['geo.country'] == "United States" or ['id'] == 'b2b1f597-0385-4fed-a911-140facb757ef'

| extend systematic_view = ceiling( resp_header_size_bytes )

| extend resp_avg = cos( resp_body_size_bytes )

| project-away systematic_view

| project-keep resp_avg

| take 5

Combine multiple percentiles into a single chart in APL

['sample-http-logs']

| summarize percentiles_array(req_duration_ms, 50, 75, 90) by bin_auto(_time)

Combine mathematical functions

['sample-http-logs']

| extend tangent = tan( req_duration_ms ), cosine = cos( resp_header_size_bytes ), absolute_input = abs( req_duration_ms ), sine = sin( resp_header_size_bytes ), power_factor = pow( req_duration_ms, 4)

| extend angle_pi = degrees( resp_body_size_bytes ), pie = pi()

| project tangent, cosine, absolute_input, angle_pi, pie, sine, power_factor

['github-issues-event']

| where actor !endswith "[bot]"

| where repo startswith "kubernetes/"

| where action == "opened"

| summarize count() by bin_auto(_time)

Change global configuration attributes

['sample-http-logs']

| extend status = coalesce(status, "info")

Set default value on event field

['sample-http-logs']

| project status = case(

isnotnull(status) and status != "", content_type, // use content_type if status is not null and not an empty string

"info" // default value

)

Extracting payment amount details from trace data

['otel-demo-traces']

| extend amount = ['attributes.custom']['app.payment.amount']

| where isnotnull( amount)

| project _time, trace_id, name, amount, ['attributes.custom']

Filtering GitHub issues by label identifier

['github-issues-event']

| extend data = tostring(labels)

| where data contains "d73a4a"

Aggregating trace counts by HTTP method

['otel-demo-traces']

| extend httpFlavor = tostring(['attributes.custom'])

| summarize Count=count() by ['attributes.http.method']